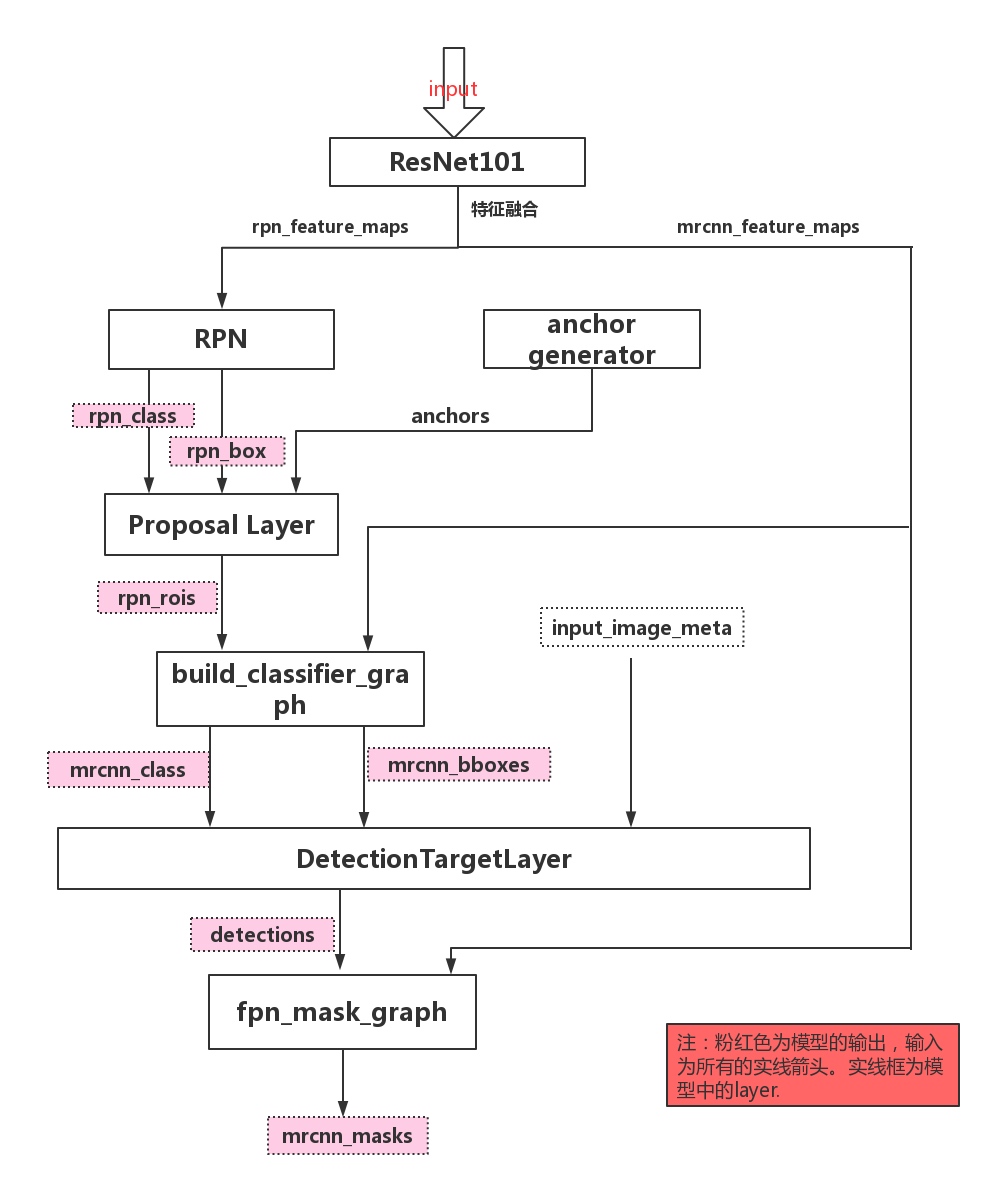

Backbone:

- ResNet and FPN: the author wrote the FPN code directly inside `class MaskRCNN` in model.py instead of factoring it out, whereas the ResNet code is separated out.
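To make the backbone output a bit more concrete, here is a small sketch of the feature map sizes the pyramid produces for a given input image. The stride list is an assumption (the usual P2 to P6 setup, which I believe matches the repo's default `BACKBONE_STRIDES`), and `backbone_shapes` is a hypothetical helper written for this note, not the repo's own function.

```python
import math

# Assumed strides of pyramid levels P2..P6 relative to the input image.
BACKBONE_STRIDES = [4, 8, 16, 32, 64]


def backbone_shapes(image_shape, strides=BACKBONE_STRIDES):
    """Return the (height, width) of each pyramid level for an input image.

    Each level is the input size divided by its stride, rounded up.
    """
    height, width = image_shape
    return [(math.ceil(height / s), math.ceil(width / s)) for s in strides]


if __name__ == "__main__":
    for level, shape in zip(["P2", "P3", "P4", "P5", "P6"],
                            backbone_shapes((1024, 1024))):
        print(level, shape)
```

Every later stage (anchors, RPN, ROI Align) works on these per-level shapes, so it helps to keep them in mind when reading the rest of the code.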

Region Proposal Network:

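- Anchor Boxes Generate: the code that generates the anchor boxes.

- RPN: the code for the RPN network architecture.

- Proposal Layer: the part that combines the generated anchor boxes with the RPN outputs and then runs the result through NMS.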
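The anchor and NMS steps in the list above can be sketched in plain Python. This is a simplified illustration, not the repo's implementation: `generate_anchors`, `iou`, and `nms` are hypothetical names, the anchor size convention (height = scale / sqrt(ratio), width = scale * sqrt(ratio)) is an assumption, and the step where the RPN's box deltas are applied to the anchors before NMS is left out.

```python
import math


def generate_anchors(scale, ratios, feature_shape, feature_stride):
    """Generate anchors as (y1, x1, y2, x2) in image pixels.

    One anchor per ratio is centred on every feature map cell.
    """
    anchors = []
    for row in range(feature_shape[0]):
        for col in range(feature_shape[1]):
            cy, cx = row * feature_stride, col * feature_stride
            for ratio in ratios:
                h = scale / math.sqrt(ratio)
                w = scale * math.sqrt(ratio)
                anchors.append((cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2))
    return anchors


def iou(a, b):
    """Intersection over union of two (y1, x1, y2, x2) boxes."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold):
    """Greedy NMS: keep the best box, drop boxes overlapping it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    anchors = generate_anchors(32, [0.5, 1, 2], (2, 2), 4)
    print(len(anchors))  # 2 * 2 cells * 3 ratios = 12
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(nms(boxes, [0.9, 0.8, 0.7], 0.5))  # [0, 2]
```

In the example the second box overlaps the first with an IoU of about 0.68, so it is suppressed, while the third box does not overlap anything and survives.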

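Task heads:

- FPN Classifier Graph: the ROI Align layer plus the classification network architecture.

- Build FPN Mask Graph: the ROI Align layer plus the mask network architecture.

- Detection Layer: runs after the FPN Classifier Graph and filters the results by confidence and by count; this is essentially post-processing.

- Detection Target Layer: runs after the Proposal Layer; this is training code.

- Mask RCNN Loss: everything related to the losses.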
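The "filter by confidence and by count" step of the Detection Layer can be sketched as follows. This is only a minimal illustration under my own assumptions: `refine_detections` is a hypothetical function, class 0 is assumed to be background, and the box refinement and per-class NMS that the real layer also performs are omitted.

```python
def refine_detections(class_probs, min_confidence, max_instances):
    """Pick the final detections from per-ROI class probabilities.

    class_probs: one list of class probabilities per ROI, index 0 = background.
    Returns (roi_index, class_id, score) tuples, best score first.
    """
    candidates = []
    for roi_index, probs in enumerate(class_probs):
        # Take the most likely class for this ROI.
        class_id = max(range(len(probs)), key=lambda c: probs[c])
        score = probs[class_id]
        # Drop background ROIs and low-confidence detections.
        if class_id > 0 and score >= min_confidence:
            candidates.append((roi_index, class_id, score))
    # Keep only the top-scoring detections, up to max_instances.
    candidates.sort(key=lambda d: d[2], reverse=True)
    return candidates[:max_instances]


if __name__ == "__main__":
    probs = [
        [0.10, 0.90, 0.00],  # confident class 1
        [0.80, 0.10, 0.10],  # background, dropped
        [0.20, 0.30, 0.50],  # class 2 but below the threshold
        [0.05, 0.15, 0.80],  # confident class 2
    ]
    print(refine_detections(probs, min_confidence=0.7, max_instances=100))
```

The two thresholds here play the role that settings such as a minimum detection confidence and a maximum number of instances play in the config.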

In training mode:

|

|

In inference (testing) mode:

```python
else:
    # Network Heads
    # Proposal classifier and BBox regressor heads
    mrcnn_class_logits, mrcnn_class, mrcnn_bbox =\
        fpn_classifier_graph(rpn_rois, mrcnn_feature_maps, input_image_meta,
                             config.POOL_SIZE, config.NUM_CLASSES,
                             train_bn=config.TRAIN_BN)

    # Detections
    # output is [batch, num_detections, (y1, x1, y2, x2, class_id, score)] in
    # normalized coordinates
    detections = DetectionLayer(config, name="mrcnn_detection")(
        [rpn_rois, mrcnn_class, mrcnn_bbox, input_image_meta])

    # Create masks for detections
    detection_boxes = KL.Lambda(lambda x: x[..., :4])(detections)
    mrcnn_mask = build_fpn_mask_graph(detection_boxes, mrcnn_feature_maps,
                                      input_image_meta,
                                      config.MASK_POOL_SIZE,
                                      config.NUM_CLASSES,
                                      train_bn=config.TRAIN_BN)

    model = KM.Model([input_image, input_image_meta, input_anchors],
                     [detections, mrcnn_class, mrcnn_bbox,
                      mrcnn_mask, rpn_rois, rpn_class, rpn_bbox],
                     name='mask_rcnn')
```
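To show what the `[batch, num_detections, (y1, x1, y2, x2, class_id, score)]` layout from the comment above looks like for a single image, here is a small sketch that splits such rows into boxes, class IDs, and scores. `split_detections` is a hypothetical helper, and treating a row with `class_id == 0` as zero padding that marks the end of the real detections is an assumption on my part.

```python
def split_detections(detections):
    """Split rows of (y1, x1, y2, x2, class_id, score) into three lists.

    Rows with class_id == 0 are assumed to be zero padding, so reading
    stops at the first such row.
    """
    boxes, class_ids, scores = [], [], []
    for y1, x1, y2, x2, class_id, score in detections:
        if int(class_id) == 0:
            break
        boxes.append((y1, x1, y2, x2))  # same slice as x[..., :4]
        class_ids.append(int(class_id))
        scores.append(score)
    return boxes, class_ids, scores


if __name__ == "__main__":
    detections = [
        (0.1, 0.2, 0.5, 0.6, 3, 0.95),
        (0.3, 0.3, 0.9, 0.8, 1, 0.80),
        (0.0, 0.0, 0.0, 0.0, 0, 0.0),  # padding
        (0.0, 0.0, 0.0, 0.0, 0, 0.0),  # padding
    ]
    boxes, class_ids, scores = split_detections(detections)
    print(boxes)
    print(class_ids)
    print(scores)
```

The first four columns are exactly what the `KL.Lambda` slice passes on to the mask branch, which is why the masks are only computed for the final detections rather than for every proposal.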

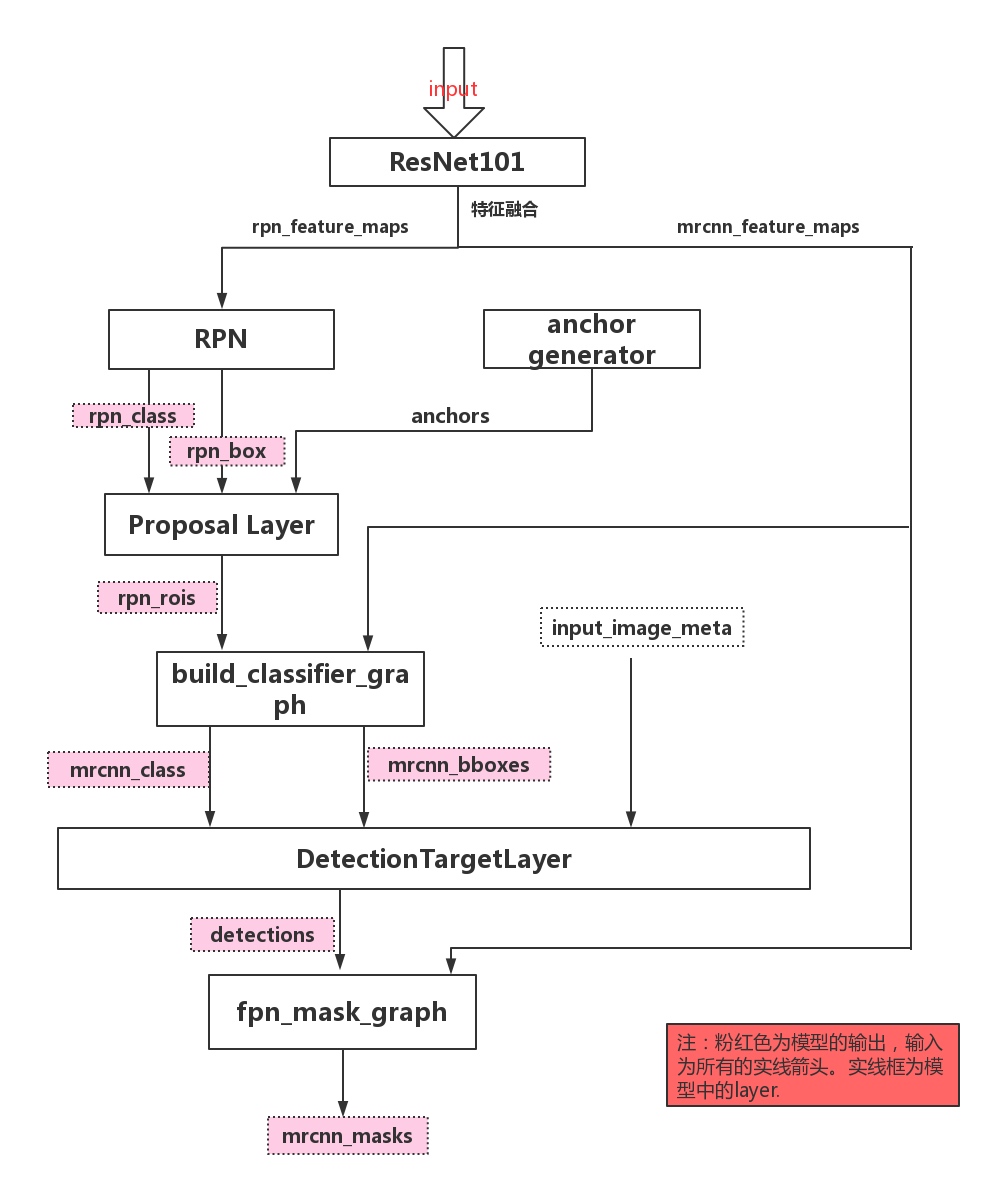

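The following are older diagrams from someone else's blog, so they no longer quite match the code: `build_classifier_graph` has been removed. The upper diagram is inference and the lower diagram is training.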